Java code example to insert data from CSV to database

- Details

- Written by Nam Ha Minh

- Last Updated on 24 September 2019 | Print Email

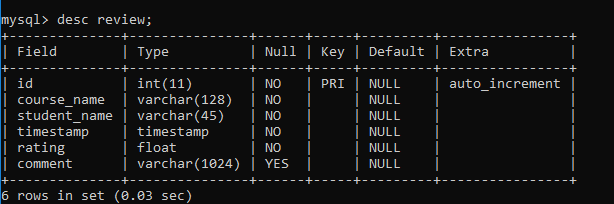

Course Name,Student Name,Timestamp,Rating,Comment Java Servlet JSP and Hibernate,Praveen Gurram,2019-07-31 19:10:13,5.0,excellent teaching Java Microservices Masterclass,Van Hoang Tran,2019-04-23 09:48:58,4.5, Python for Data Science,Dhara Patel,2019-06-18 02:50:17,5.0,Amazing experience after this course. Design Patterns in Java,Darshan Patel,2019-06-28 21:46:56,5.0,Great Experience. ...As you can see, the header (first line) indicates that there are 5 fields: course name, student name, timestamp, rating and comment. All fields must have values, except for comment which can be omitted (line 3 in the above CSV data).We need to import data from this CSV file to the review table in the database (MySQL), which has the following structure:

I will share with you two different ways of inserting data read from a CSV file to a database, according two types of CSV format: simple and complex.

I will share with you two different ways of inserting data read from a CSV file to a database, according two types of CSV format: simple and complex. 1. Java code example to insert data from CSV (simple format) to database

Suppose that data in the CSV file is simple: values are simply separated by commas. There’s no quotes, no commas in values, and the values do not span on multiple lines. So we can use pure Java File IO code with JDBC batch insert.Here’s the full code of an example program:// copyright by https://www.codejava.net

package net.codejava;

import java.io.*;

import java.sql.*;

public class SimpleCsv2DbInserter {

public static void main(String[] args) {

String jdbcURL = "jdbc:mysql://localhost:3306/sales";

String username = "user";

String password = "password";

String csvFilePath = "Reviews-simple.csv";

int batchSize = 20;

Connection connection = null;

try {

connection = DriverManager.getConnection(jdbcURL, username, password);

connection.setAutoCommit(false);

String sql = "INSERT INTO review (course_name, student_name, timestamp, rating, comment) VALUES (?, ?, ?, ?, ?)";

PreparedStatement statement = connection.prepareStatement(sql);

BufferedReader lineReader = new BufferedReader(new FileReader(csvFilePath));

String lineText = null;

int count = 0;

lineReader.readLine(); // skip header line

while ((lineText = lineReader.readLine()) != null) {

String[] data = lineText.split(",");

String courseName = data[0];

String studentName = data[1];

String timestamp = data[2];

String rating = data[3];

String comment = data.length == 5 ? data[4] : "";

statement.setString(1, courseName);

statement.setString(2, studentName);

Timestamp sqlTimestamp = Timestamp.valueOf(timestamp);

statement.setTimestamp(3, sqlTimestamp);

Float fRating = Float.parseFloat(rating);

statement.setFloat(4, fRating);

statement.setString(5, comment);

statement.addBatch();

if (count % batchSize == 0) {

statement.executeBatch();

}

}

lineReader.close();

// execute the remaining queries

statement.executeBatch();

connection.commit();

connection.close();

} catch (IOException ex) {

System.err.println(ex);

} catch (SQLException ex) {

ex.printStackTrace();

try {

connection.rollback();

} catch (SQLException e) {

e.printStackTrace();

}

}

}

}while ((lineText = lineReader.readLine()) != null) {

....

}Since the data is in simple format, we can safely read the values on each line by splitting the text by a comma like this:String[] data = lineText.split(",");

String courseName = data[0];

String studentName = data[1];

String timestamp = data[2];

String rating = data[3];

...And we use JDBC batch update feature to execute the SQL Insert statements in batches (each batch has size of 20 statements), for best performance:...

int batchSize = 20;

...

statement.addBatch();

if (count % batchSize == 0) {

statement.executeBatch();

}

...Also, JDBC transaction is used to make sure all data is inserted successfully or none executed at all.Note that to run the above program, you need to have MySQL JDBC driver JAR file available in the classpath. 2. Java code example to insert data from CSV (complex format) to database

Data in the CSV file can be more complex: having quotes, commas in values and values span on multiple lines. For example:Course Name,Student Name,Timestamp,Rating,Comment "Java Servlet JSP and Hibernate",Praveen Gurram,2019-07-31 19:10:13,5.0,"excellent teaching" "Java Microservices Masterclass",Van Hoang Tran,2019-04-23 09:48:58,4.5,"The course is great" "Python for Data Science",Dhara Patel,2019-06-18 02:50:17,5.0,"Amazing experience after this course. Thank you, instructor" "Design Patterns in Java",Darshan Patel,2019-06-28 21:46:56,5.0,"Great Experience, I love this course" ...In this case, you should use an external CSV library to handle all of these complexities, such as SuperCSV (open source).The following program illustrates how to use SuperCSV library to read data from a CSV file, and use JDBC batch update to insert data into the database to get best performance. Here’s the full code:

// copyright by https://www.codejava.net

package net.codejava;

import java.io.*;

import java.sql.*;

import org.supercsv.cellprocessor.Optional;

import org.supercsv.cellprocessor.ParseDouble;

import org.supercsv.cellprocessor.constraint.NotNull;

import org.supercsv.cellprocessor.ift.CellProcessor;

import org.supercsv.io.CsvBeanReader;

import org.supercsv.io.ICsvBeanReader;

import org.supercsv.prefs.CsvPreference;

public class ComplexCsv2DbInserter {

public static void main(String[] args) {

String jdbcURL = "jdbc:mysql://localhost:3306/sales";

String username = "user";

String password = "password";

String csvFilePath = "Reviews-complex.csv";

int batchSize = 20;

Connection connection = null;

ICsvBeanReader beanReader = null;

CellProcessor[] processors = new CellProcessor[] {

new NotNull(), // course name

new NotNull(), // student name

new ParseTimestamp(), // timestamp

new ParseDouble(), // rating

new Optional()// comment

};

try {

long start = System.currentTimeMillis();

connection = DriverManager.getConnection(jdbcURL, username, password);

connection.setAutoCommit(false);

String sql = "INSERT INTO review (course_name, student_name, timestamp, rating, comment) VALUES (?, ?, ?, ?, ?)";

PreparedStatement statement = connection.prepareStatement(sql);

beanReader = new CsvBeanReader(new FileReader(csvFilePath),

CsvPreference.STANDARD_PREFERENCE);

beanReader.getHeader(true); // skip header line

String[] header = {"courseName", "studentName", "timestamp", "rating", "comment"};

Review bean = null;

int count = 0;

while ((bean = beanReader.read(Review.class, header, processors)) != null) {

String courseName = bean.getCourseName();

String studentName = bean.getStudentName();

Timestamp timestamp = bean.getTimestamp();

double rating = bean.getRating();

String comment = bean.getComment();

statement.setString(1, courseName);

statement.setString(2, studentName);

statement.setTimestamp(3, timestamp);

statement.setDouble(4, rating);

statement.setString(5, comment);

statement.addBatch();

if (count % batchSize == 0) {

statement.executeBatch();

}

}

beanReader.close();

// execute the remaining queries

statement.executeBatch();

connection.commit();

connection.close();

long end = System.currentTimeMillis();

System.out.println("Execution Time: " + (end - start));

} catch (IOException ex) {

System.err.println(ex);

} catch (SQLException ex) {

ex.printStackTrace();

try {

connection.rollback();

} catch (SQLException e) {

e.printStackTrace();

}

}

}

}To use SuperCSV, you need to have the super-csv-x.x.x.jar file present in the classpath, by adding the following dependency in the pom.xml file (if you use Maven):<dependency> <groupId>net.sf.supercsv</groupId> <artifactId>super-csv</artifactId> <version>2.4.0</version> </dependency>Consult this tutorial to learn more how to read CSV files using SuperCSV. In the above program, we use the model class as follows:

package net.codejava;

import java.sql.Timestamp;

public class Review {

private String courseName;

private String studentName;

private Timestamp timestamp;

private double rating;

private String comment;

// constructors...

// getters and setters...

}And a custom cell processor class as follows:package net.codejava;

import java.sql.Timestamp;

import org.supercsv.cellprocessor.CellProcessorAdaptor;

import org.supercsv.cellprocessor.ift.CellProcessor;

import org.supercsv.util.CsvContext;

public class ParseTimestamp extends CellProcessorAdaptor {

public ParseTimestamp() {

super();

}

public ParseTimestamp(CellProcessor next) {

super(next);

}

@Override

public Object execute(Object value, CsvContext context) {

return Timestamp.valueOf((String) value);

}

}This class is need to parse the timestamp value in the CSV file. 3. Performance Test Result

The above sample programs were tested with MySQL database server running on the same computer. They perform well with CSV files containing around one million records. For your reference, the following table shows the result of my performance test:

# records

|

CSV File Size |

Java Only |

SuperCSV |

100 | 16 KB | 1,372 ms | 1,392 ms |

1,000 | 167 KB | 2,224 ms | 2,540 ms |

10,000 | 1.67 MB | 6,615 ms | 6,979 ms |

100,000 | 16.7 MB | 38,017 ms | 35,379 ms |

1,000,000 | 167 MB | 338,771 ms | 347,841 ms |

Related Tutorials:

Other Java Coding Tutorials:

- How to display images from database in JSP page with Java Servlet

- How to implement remember password (remember me) for Java web application

- How to code login and logout with Java Servlet, JSP and MySQL

- How to Code Hit Counter for Java web application

- 10 Common Mistakes Every Beginner Java Programmer Makes

- 10 Java Core Best Practices Every Java Programmer Should Know

- How to become a good programmer? 13 tasks you should practice now

- How to calculate MD5 and SHA hash values in Java

- Java File Encryption and Decryption Example

About the Author:

Nam Ha Minh is certified Java programmer (SCJP and SCWCD). He began programming with Java back in the days of Java 1.4 and has been passionate about it ever since. You can connect with him on Facebook and watch his Java videos on YouTube.

Nam Ha Minh is certified Java programmer (SCJP and SCWCD). He began programming with Java back in the days of Java 1.4 and has been passionate about it ever since. You can connect with him on Facebook and watch his Java videos on YouTube.

Comments

The count variable should be incremented in the loop. It was missing in the code.

Thanks for sharing this great article. really well done. It does help to beginners like me.

QQ, how do you read multiples files with in the same code? and insert into multiple tables.

you forgot to increment count variable.